Allow Google Into Password Protected Areas

I have many clients who have password-protected portions of their websites. They love that they can charge users to access content but still allow Google into their website to index it. How does the PHP script know if it's Google accessing the site? By checking the user agent, of course.

The PHP

// Allow logged-in users, plus any request whose user agent contains "Googlebot".
$allow_inside = $is_logged_in || stripos($_SERVER['HTTP_USER_AGENT'] ?? '', 'Googlebot') !== false;

The above code checks whether the user is logged in OR whether the user agent string contains "Googlebot". So simple!

The one disclaimer I give is that this isn't the most secure way to do things. Firefox has a plugin that allows the user to set their user agent at will. This is a risk that the customer is willing to take.

You sure as hell wouldn't want to protect sensitive information in this fashion. Exclusive news articles? Sure, why not? The exposure on Google is likely worth a few hacks getting in. I also use this for the website's built-in search engine.

![9 Mind-Blowing WebGL Demos]()

As much as developers now loathe Flash, we're still playing a bit of catch up to natively duplicate the animation capabilities that Adobe's old technology provided us. Of course we have canvas, an awesome technology, one which I highlighted 9 mind-blowing demos. Another technology available...

![How I Stopped WordPress Comment Spam]()

I love almost every part of being a tech blogger: learning, preaching, bantering, researching. The one part about blogging that I absolutely loathe: dealing with SPAM comments. For the past two years, my blog has registered 8,000+ SPAM comments per day. PER DAY. Bloating my database...

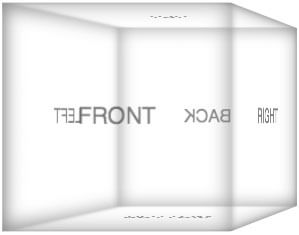

![Create a CSS Cube]()

CSS cubes really showcase what CSS has become over the years, evolving from simple color and dimension directives to a language capable of creating deep, creative visuals. Add animation and you've got something really neat. Unfortunately each CSS cube tutorial I've read is a bit...

![CSS Kwicks]()

One of the effects that made me excited about client side and JavaScript was the Kwicks effect. Take a list of items and react to them accordingly when hovered. Simple, sweet. The effect was originally created with JavaScript but come five years later, our...

If you wish to give Google access to the restricted areas of your site for AdSense purposes, Google has the tools.

Go to AdSense, AdSense Setup, then Site Authentication.

From here you can give Googlebot a POST username/password that it can use to log in and index for the purpose of serving ads. This doesn’t help get content into the main index, but it is nice if you want relevant ads for your subscription material.

As David said, if it is indexed, it can be seen. That is why Google doesn’t offer this service for regular indexing.

Hi David

To me, this defeats the whole purpose of protecting the area in the first place. First, as you said, because the user agent can be easily faked. Secondly, and most importantly (because you don’t need advanced knowledge to fake the user agent), if Google can read it then it will be accessible to the whole Internet by using the Google cache feature…

Which effectively makes the information public.

@Adrian & Mark: I agree 100% that this isn’t a great practice. I’ve told my customers that it’s a bad idea. I’ve even sat down and showed them how it can be beaten (Google “cache” feature, user agent switcher…). They still wanted it and I had my orders.

I use this the most with the website’s internal search engine. Unauthorized users will get kicked out but the search engine continues to index content.

If I use Google Analytics and part of my site is password protected with private client information, does the standard code default to not tracking what is in the password-protected part of the site, and to what extent? Do you know where Google explains this on their site?

Thanks

How about doing a reverse DNS check in addition to checking the user agent? That will not be perfect, but it will block or confuse the novice hack.

http://googlewebmastercentral.blogspot.com/2006/09/how-to-verify-googlebot.html
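A minimal sketch of that reverse DNS check, assuming the approach described in the linked post: look up the host name for the visitor's IP, confirm it sits under a Google crawler domain, then resolve that host name forward and confirm it maps back to the same IP. The function name `is_verified_googlebot` and its two injectable resolver parameters are hypothetical, added so the logic can be exercised without real DNS. The accepted domains (`googlebot.com`, `google.com`) are an assumption worth checking against Google's current guidance, and `gethostbynamel()` only returns IPv4 addresses.

```php
<?php
/**
 * Hypothetical helper: true when $ip reverse-resolves to a Google crawler
 * host name AND that host name resolves forward to the same IP.
 * $reverse and $forward default to PHP's DNS functions and exist only
 * so the check can be tested with fake resolvers.
 */
function is_verified_googlebot(string $ip, ?callable $reverse = null, ?callable $forward = null): bool
{
    // Reject anything that is not a syntactically valid IP address.
    if (filter_var($ip, FILTER_VALIDATE_IP) === false) {
        return false;
    }

    $reverse = $reverse ?? 'gethostbyaddr';
    $forward = $forward ?? 'gethostbynamel';

    // Step 1: reverse lookup. gethostbyaddr() returns the IP unchanged
    // when no host name is found, or false on malformed input.
    $host = $reverse($ip);
    if (!is_string($host) || $host === $ip) {
        return false;
    }

    // Step 2: the host name must end in an accepted crawler domain
    // (assumed list; adjust to Google's current documentation).
    if (!preg_match('/\.(googlebot|google)\.com$/i', $host)) {
        return false;
    }

    // Step 3: forward lookup must include the original IP, otherwise
    // the reverse record could have been forged by the IP's owner.
    $ips = $forward($host);
    return is_array($ips) && in_array($ip, $ips, true);
}
```

Combined with the user agent test, the gate would become something like `$is_logged_in || (stripos($ua, 'Googlebot') !== false && is_verified_googlebot($_SERVER['REMOTE_ADDR']))`. DNS lookups are slow, so caching the result per IP is probably worthwhile.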

That small bit of code would render your entire login system pointless to individuals who know you are using this technique. All I would need to do is mask the user agent (using cURL, for example) to show “Googlebot” and I’m in and have access to everything.

A bad idea is an understatement.

Hi, Where would I put this code to use it?

Thanks,

Baz

A better method is to allow only the IPs of the spider bots.

You can always get an updated list here:

http://www.iplists.com/

Don’t forget to ask the owner for permission ;)
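As a rough sketch of the IP allowlist idea: the helpers below test an IPv4 address against a list of CIDR ranges. The function names are hypothetical, the ranges in the usage comment are reserved documentation ranges rather than real crawler addresses, and the bit arithmetic assumes 64-bit PHP. The real ranges would have to come from a maintained list such as the one linked above.

```php
<?php
// Hypothetical helper: true when IPv4 address $ip falls inside $cidr
// ("a.b.c.d/nn"; a bare address is treated as /32).
function ip_in_cidr(string $ip, string $cidr): bool
{
    [$subnet, $bits] = array_pad(explode('/', $cidr, 2), 2, '32');

    $ip_long     = ip2long($ip);
    $subnet_long = ip2long($subnet);

    // Reject malformed addresses and non-numeric or out-of-range prefixes.
    if ($ip_long === false || $subnet_long === false || !ctype_digit($bits) || (int) $bits > 32) {
        return false;
    }

    // Build a 32-bit network mask (assumes 64-bit integers).
    $bits = (int) $bits;
    $mask = $bits === 0 ? 0 : (~0 << (32 - $bits)) & 0xFFFFFFFF;

    return ($ip_long & $mask) === ($subnet_long & $mask);
}

// Hypothetical helper: true when $ip matches any range in the allowlist.
function ip_is_allowed(string $ip, array $allowed_ranges): bool
{
    foreach ($allowed_ranges as $cidr) {
        if (ip_in_cidr($ip, $cidr)) {
            return true;
        }
    }
    return false;
}

// Example with placeholder ranges (NOT real crawler IPs):
// ip_is_allowed($_SERVER['REMOTE_ADDR'], ['192.0.2.0/24', '198.51.100.0/24']);
```

An allowlist like this is only as good as its data; stale ranges will either lock the crawler out or let strangers in.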

Checking whether the user agent is Googlebot is enough in most cases. 99.99% of people are not hackers, and if you give access to logged-in users, they can share the content of your webpage anyway…

Does it matter where on the page I place this code? Thanks BTW! Sorry if this is a noob question!

Thanks for this bit!

@Jacob: Try placing it near the top (before the tag if possible). A page is processed from top to bottom, so with the check first, unauthorized visitors are sent to another page before the rest of the content is loaded!
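For anyone wondering what "near the top" might look like in practice, here is a minimal sketch. It wraps the same user agent test from the post in a hypothetical function, `protected_page_redirect`, which returns the URL to send the visitor to, or `null` if they may stay. The session key `user_id` and the `/login.php` URL are assumptions, not anything from the original post.

```php
<?php
// Hypothetical helper: returns the URL to redirect to, or null when the
// visitor may stay. Uses the same user agent test as the post, so it is
// just as easy to spoof.
function protected_page_redirect(bool $is_logged_in, string $user_agent, string $login_url = '/login.php'): ?string
{
    $allow_inside = $is_logged_in || stripos($user_agent, 'Googlebot') !== false;
    return $allow_inside ? null : $login_url;
}

// Usage at the very top of a protected page, before any HTML output
// ($_SESSION['user_id'] and /login.php are assumptions):
//
//   session_start();
//   $redirect = protected_page_redirect(
//       !empty($_SESSION['user_id']),
//       $_SERVER['HTTP_USER_AGENT'] ?? ''
//   );
//   if ($redirect !== null) {
//       header('Location: ' . $redirect);
//       exit;
//   }
```

The check has to run before any output because `header()` cannot send a redirect once the page body has started.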

Though the code you posted above is very insecure, this can be done securely and sensibly.

To beat users changing their user-agent, Google offers a way to check to see if an IP belongs to one of their bots. This will allow you to check to see if the user-agent is real, or forged by a user. Somebody posted this above, too.

http://googlewebmastercentral.blogspot.com/2006/09/how-to-verify-googlebot.html

As for cache, you can instruct Google not to cache your page content.
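One way to do that, sketched here under assumptions: the `noarchive` robots directive asks search engines not to offer a cached copy of the page. It can be sent either as an `X-Robots-Tag` HTTP header or as a robots meta tag in the page head. The two function names below are hypothetical helpers.

```php
<?php
// Hypothetical helper: header line asking crawlers not to keep a cached copy.
function noarchive_header(): string
{
    return 'X-Robots-Tag: noarchive';
}

// Hypothetical helper: equivalent meta tag for the page <head>.
function noarchive_meta(): string
{
    return '<meta name="robots" content="noarchive">';
}

// Send the header on protected pages, but only if output has not started.
if (!headers_sent()) {
    header(noarchive_header());
}
```

Either form should be enough on its own; the header variant also covers non-HTML files such as PDFs.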

Can someone please help me get this set up on my WordPress blog? I have been laboring at it, and paying programmers who can’t get it done. It sounds so easy! If anyone gets this, please email me! jacob (- at – ) jumpmanual.com. I will pay to have it done. I don’t care if it is not secure; it won’t be an issue for us.

Please help me :)

Thanks, and sorry to beg!

Nice of you to post the PHP code here. It’s difficult for me to write this kind of code myself. Thanks for posting it.

What about inserting the code temporarily to get your pages indexed and pulling up in search and boosting traffic? Once you are satisfied you can remove the code. Having a start-up I’m willing to try anything but would probably prefer some feedback first. I would imagine that your pages would eventually leave the index but the spike may help get traffic and buzz for you. I’m sure not too many people would know how to get in it otherwise anyway.

Or you could always put an excerpt, that way you have better SEO and the bulk of the data is still hidden away.
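A rough sketch of the excerpt idea: logged-in members get the full text, everyone else (crawlers included) gets only the first few words. The function name `content_for_visitor` and the 50-word default are assumptions for illustration.

```php
<?php
// Hypothetical helper: members see everything, other visitors see only
// the first $excerpt_words words followed by an ellipsis.
function content_for_visitor(string $full_text, bool $is_logged_in, int $excerpt_words = 50): string
{
    if ($is_logged_in) {
        return $full_text;
    }

    // Split on any whitespace, ignoring empty pieces.
    $words = preg_split('/\s+/', trim($full_text), -1, PREG_SPLIT_NO_EMPTY);

    // Text shorter than the excerpt length is shown as-is.
    if (count($words) <= $excerpt_words) {
        return $full_text;
    }

    return implode(' ', array_slice($words, 0, $excerpt_words)) . '...';
}
```

Since nothing beyond the excerpt is ever sent to non-members, there is no user agent check to spoof and nothing extra for a search cache to leak.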

Yeah, probably going to do that to every page soon. I used the same one for every page and I’m starting to think that was a mistake. I just assumed a quick site summary was sufficient to entice clicks but do you think that will work against me as far as SEO goes? Google is failing in my opinion for what keywords are “found.” (so far)

@ExperienceHTPC content is king. Unfortunately, we’re trying to hide it here. If you can provide a ‘mini-post’ or even a paragraph, that would be much better than just simply a few tags in my opinion.

Well, I’ll do my best. Everything was hand-coded in Dreamweaver so every little feature is a serious pain in the butt as far as the membership pages go. I’ll start with the meta description until I figure out a better way. Hopefully that will pop with Google.

A really useful article, more so from the collection of comments that have been added since. I personally would use a combination of:

1. User Agent verification (as per your suggestion)

2. IP address check (as per QWAXYS’ suggestion)

3. Brandon’s recommendation for instructing Google not to cache the page content

Unfortunately you would never be able to stop someone signing up to the restricted content and then copying and pasting it on another site. But then they would be infringing copyright and any necessary action can be taken.

A final step 4 could be added here: put a unique string somewhere within your content that you can search for regularly to ensure content isn’t being copied.

Saw some replies about how to do this in WordPress so I thought I’d chip in. After a lot of searching I was finally able to do this securely with a plugin called MemberPress. They use the reverse DNS lookup method to ensure the bot is actually from Google, Bing, MSN, or Yahoo. They also add tags in the head to tell search engines not to cache the protected pages either…neat plugin.