Fixing Coercion, Not The Symptoms

TL;DR

Your complaints of x == y behaviors being weird, buggy, or downright broken have all blamed == as the culprit. No, it's really not. == is pretty helpful, actually.

The problems you're having are not with the == operator itself, but with the underlying values and how they coerce to different types, especially in the weird corner cases.

Instead of fixing your problems by avoiding == (and always using ===), we should focus our efforts on either avoiding—or fixing!—the corner case value coercions. Indeed, those are where all the WTFs really come from.

This post announces the release of the latest book in my You Don't Know JS book series, YDKJS: Types & Grammar, which can be read for free online!

Types & Grammar includes a Foreword by our own amazing David Walsh, and is also available for purchase through O'Reilly and other sellers, like Amazon. If there's any part of this post that you enjoy, check out Types & Grammar for lots more in-depth coverage of JS types, coercion, and grammar rules.

Warning: Herein lies a controversial, and really long, discussion that's likely to upset you. This post defends and endorses the oft-hated JavaScript coercion mechanism. Everything you've ever heard or felt about what's wrong with coercion is going to be challenged by what I lay out here. Make sure you set aside plenty of time to chew on this article.

Isn't Coercion Already Dead?

Why on earth am I talking about—let alone, defending and endorsing!—a mechanism that has been so universally panned as being awful, evil, magical, buggy, and poor language design? Hasn't the boat long since sailed? Haven't we all just moved on and left coercion in the dust? If Crockford says it's bad, then it must be.

Ummm... no. On the contrary, I think coercion hasn't ever been given a fair shot, because it's never been talked about or taught correctly. It's not surprising that you hate coercion when all you've ever seen of it is the completely wrong way to understand it.

For better or worse, nearly the entire reason for the Types & Grammar book, as well as many of my conference talks, is to make just this case.

But maybe I'm wasting my time trying to convince you. Maybe you'll never change your mind.

In fact, Mr. Crockford has something to say directly to that point:

Douglas Crockford - Are they gone? "The Better Parts", Nordic.js 2014

And the reason these things take a generation is because ultimately we do not change people's minds. We have to wait for the previous generation to retire or die before we can get critical mass on the next idea. So it's like we look around: "Are they gone?"

--Douglas Crockford

So, is he right? More pointedly, could coercion be "the next idea" that just hasn't had enough of the old static typing generation die off to be given a fair and objective examination?

I think maybe so.

Essentially, I've been looking around at coercion naysayers for years, asking, "Are they gone, yet?"

An Inconvenient Truth

"Do As I Say, Not As I Do."

Your parents told you that when you were a kid, and it annoyed you back then, didn't it? I bet it would annoy you today if someone in our profession held that stance.

So, when you hear Douglas Crockford speak negatively about coercion, you certainly assume that he similarly avoids using it in his own code. Right? Ummm... how do I put this? How do I break it to you?

Crockford uses coercions. There, I said it. Don't believe me?

// L292 - 294 of json2.js

for (i = 0; i < length; i += 1) {

partial[i] = str(i, value) || 'null';

}

Do you see the coercion? str(..) || 'null'. How does that work?

For the || operator, the first operand (str(..)) is implicitly coerced to boolean if it's not already one, and that true / false value is then used for the selection of either the first operand (str(..)) or the second ('null'). Read more about how || and && work and the common idiomatic uses of those operators.
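To make that selection behavior concrete, here is a minimal sketch. The variable names are illustrative and do not come from json2.js. It shows that || hands back one of its original operands, not the intermediate boolean:

```javascript
// || tests the truthiness of its first operand, then returns one of the
// two original operands (never the intermediate true / false value).
var fromEmpty = "" || "null";          // "" is falsy, so the second operand is selected
var fromText = "\"abc\"" || "null";    // a non-empty string is truthy, so it is kept
var fromMissing = undefined || "null"; // undefined is falsy, so the default is selected

console.log(typeof fromEmpty, typeof fromText, typeof fromMissing);
// all three results are strings, not booleans
```

This is the same default-value idiom the json2.js line relies on.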

In this case, the first operand is definitely not expected to be a boolean, as he earlier documents the str(..) function this way:

function str(key, holder) {

// Produce a string from holder[key].

..

So, his own code is relying on an implicit coercion here. The very thing he's spent a decade lecturing us is bad. And why? Why does he use it?

More importantly, why do you use such idioms? Because I know you do. Most JS devs use that || operator idiom for setting a default value to a variable. It's super useful.

He could have instead written that code as so:

tmp = str(i, value);
partial[i] = (tmp !== '') ? tmp : 'null';

This avoids coercion entirely. The !== operator (in fact all the equality operators, including == and !=) always returns a boolean from the equality check. The ? : operator first checks the first operand, then picks either the second (tmp) or the third ('null'). No coercion.

So why doesn't he do this?

Because the str(..) || 'null' idiom is common, shorter/simpler to write (no need for a temporary variable tmp), and generally easy'ish to understand, certainly as compared to the non-coercion form.

In other words, coercion, especially implicit coercion, has usages where it actually improves the readability of our code.

OK, so that's just one isolated exception he made, right? Not quite.

In just that one "json2.js" file, here's a (not necessarily complete) list of places Crockford uses either explicit or implicit coercions: L234, L275, L293, L301-302, L310, L316-317, L328-329, L340-341, L391, L422, and L442.

Oh, wait. This is just the old "json2.js" library. That's unfair, right? How about his own JSLint library, which he still maintains (EDIT: he's soon releasing an update for ES6): L671, L675, L713, L724, L782, ... You get the point, right?

Doug Crockford uses coercion to make his code more readable. I applaud him for it.

Ignore what he says about coercion being evil or bad. It's useful, and he proves that with his code no matter what headline-grabbing slides he puts up in his conference talks.

But... == Is The Evil

OK, you're right, there's not a single instance of == in his code. And whenever he derides coercion, he's almost certainly talking about == specifically.

So am I being unfair by highlighting a bunch of non-== coercions? Actually, I'd argue it's he that's being unfair, by constantly equating == with coercion (pun intended, of course!). He's not alone. I'd say almost all JS developers do the same. When they hear "coercion", they inevitably invoke ==.

Coercion is a mechanism that is allowed to work when == is used, and prevented from being used when === is used. But that realization should make it clear that == and coercion are orthogonal concerns. In other words, you can have complaints about == that are separate from complaints about coercion itself.

I'm not just trying to nitpick here. This is super important to understand the rest of this post: we have to consider coercion separately from considering ==. Call == "equality coercion" if you like, but don't just conflate it with coercion itself.

By and large, I think almost all complaints made against == are actually issues with coercion, and we're going to get to those later. We're also going to come back to ==, and look at it a bit more. Keep reading!

Need Coercion?

Coercion is what happens in JavaScript when you need to go from one type (like string) to another (like boolean). This is not unique to JS though. Every programming language has values of different types, and most programs require you to convert from one to the other. In statically typed (type enforced) languages, conversion is often called "casting", and it's explicit. But the conversion happens nonetheless.

JavaScript coercion can either be intentional and explicit, or it can happen implicitly as a side-effect.

But there are hardly any non-trivial JS programs out there that don't at some point or another rely on coercion of some form. When people hate on coercion, they're usually hating on implicit coercion, but explicit coercion is usually seen as OK.

var x = 42;
var y = x + ""; // implicit coercion!
y; // "42"
var z = String(x); // explicit coercion!
z; // "42"

Even for those who are publicly against implicit coercion, for some reason they're usually just fine with the x + "" form here. I don't frankly understand why this implicit coercion is OK and many others aren't.

You can focus on deciding whether you prefer explicit or implicit coercion forms, but you cannot reasonably argue that most JS programs can be written without any coercion at all.

An awful lot of developers say we shouldn't have coercion, but they almost never take the time to think through all the corner cases that would present. You can't just say the mechanism shouldn't exist without having an answer to what you should do instead.

This article in a sense is an exercise in that pursuit, to examine just how sensible such a position is. Hint: not much.

Why Coercion?

The case for coercion is much broader than I will fully lay out here. Check out Chapter 4 of Types & Grammar for a lot more detail, but let me try to briefly build on what we saw earlier.

In addition to the x || y (and x && y) idioms, which can be quite helpful in expressing logic in a simpler way than the x ? x : y form, there are other cases where coercion, even implicit coercion, is useful in improving the readability and understandability of our code.

// no coercion

if (x === 3 || x === "3") {

// do something

}

// explicit coercion

if (Number(x) == 3) {

// do something

}

// implicit coercion

if (x == 3) {

// do something

}

The first form of the conditional skirts coercion entirely. But it's also longer and more "complicated", and I would argue introduces extra details here which might very well be unnecessary.

If the intent of this code is to do something if x is the three value, regardless of if it's in its string form or number form, do we actually need to know that detail and think about it here? Kinda depends.

Often, no. Often, that fact will be an implementation detail that's been abstracted away into how x got set (from a web page form element, or a JSON response, or ...). We should leave it abstracted away, and use some coercion to simplify this code by upholding that abstraction.

So, is Number(x) == 3 better or worse than x == 3? In this very limited case, I'd say it's a toss-up. I wouldn't argue with those who prefer the explicit form over the implicit. But I kinda like the implicit form here.

Here's another example I like even more:

// no coercion

if (x === undefined || x === null) {

// do something

}

// implicit coercion

if (x == null) {

// do something

}

The implicit form works here because the specification says that null and undefined are coercively equal to each other, and to no other values in the language. That is, it's perfectly safe to treat undefined and null as indistinguishable, and indeed I would strongly recommend that.

The x == null test is completely safe from any other value that might be in x coercing to null, guaranteed by the spec. So, why not use the shorter form so that we abstract away this weird implementation detail of both undefined and null empty values?
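As a quick check of that guarantee, the sketch below runs a handful of falsy and empty-looking values through the test. The helper name isNullish is an assumption made for illustration:

```javascript
// Hypothetical helper: true only for the two "empty" values.
function isNullish(x) {
  return x == null;
}

var candidates = [null, undefined, 0, "", false, NaN, [], {}];
var results = candidates.map(function (value) {
  return isNullish(value);
});

console.log(results);
// only the first two entries (null and undefined) are true
```

None of the other falsy values (0, "", false, NaN) slip through, which is why the short form is safe here.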

Using === prevents you from being able to take advantage of all the benefits of coercion. And you've been told that's the answer to all coercion problems, right?

Here's a dirty secret: the <, <=, > and >= comparison operators, as well as the +, -, *, and / math operators, have no way to disable coercion. So, just simply using === doesn't even remotely fix all your problems, but it removes the really useful instances of the coercive equality == tool.
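A few illustrative cases (the variable names are just for this sketch) show those operators coercing regardless of how strictly you test equality elsewhere:

```javascript
var lessThan = "42" < 43;     // "42" is converted to the number 42: true
var product = "6" * "7";      // both strings are converted to numbers: 42
var difference = "50" - 8;    // "50" is converted to 50: 42
var bothStrings = "10" < "9"; // two strings are compared character by character: true
var emptyCompare = -1 < "";   // "" is converted to 0: true

console.log(lessThan, product, difference, bothStrings, emptyCompare);
```

Note that the "10" < "9" case involves no number conversion at all, which is a different kind of surprise from the others.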

If you hate coercion, you've still got to contend with all the places where === can't help you. Or, you could embrace and learn to use coercion to your advantage, so that == helps you instead of giving you fits.

This post has a lot more to get to, so I'm not going to belabor any further the case for coercion and ==. Again, Chapter 4, Types & Grammar covers the topic in a lot more detail if you're interested.

A Tale Of Two Values

I've just extolled why coercion is so great. But we all know coercion has some ugly parts to it—there's no denying it. Let's get to the pain, which really is the whole point of this article.

I'm gonna make a perhaps dubious claim: the root of most evil in coercion is Number("") resulting in 0.

You may be surprised to see just how many other coercion cases come down to that one. Yeah, yeah, there are others, too. We'll get there.

I said this earlier, but it bears repeating: all languages have to deal with type conversions, and therefore all languages have to deal with corner cases producing weird results. Every single one.

// C

char s[] = "";

int num = atoi(s);

printf("%d",num); // 0

// Java

String s = "";

Integer num = Integer.valueOf(s);

System.out.println(num); // java.lang.NumberFormatException

C chooses to convert "" to 0. But Java complains and throws an exception. JavaScript is clearly not uniquely plagued by this question.

For better or worse, JavaScript had to make decisions for all these sorts of corner cases, and frankly, some of those decisions are the real source of our present troubles.

But in those decisions was an undeniable—and I think admirable—design philosophy. At least in the early days, JS chose to lean away from the "let's just throw an exception every time you do something weird" philosophy, which you get from languages like Java. That's the "garbage in, garbage out" mindset.

Put simply, JS tries to make the best guess it can of what you asked it to do. It only throws an error in the extreme cases where it couldn't come up with any reasonable behavior. And many other languages have chosen similar paths. JS is more like "garbage in, some recycled materials out".

So when JS was considering what to do with strings like "", " ", and "\n\n" when asked to coerce them to a number, it chose roughly: trim all whitespace; if only "" is left, return 0. JS doesn't throw exceptions all over the place, which is why today most JS code doesn't need try..catch wrapped around nearly every single statement. I think this was a good direction. It may be the main reason I like JS.
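Here is that rule in action, as a small sketch using a few sample strings chosen for illustration:

```javascript
// Whitespace (including line breaks and tabs) is trimmed before conversion.
var inputs = ["", " ", "\n\n", "\t 42 \n", "0"];
var numbers = inputs.map(function (s) {
  return Number(s);
});

console.log(numbers);
// the first three become 0, "\t 42 \n" becomes 42, and "0" becomes 0
```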

So, let's consider: is it reasonable for "" to become 0? Is your answer any different for " " or "\n\n"? If so, why, exactly? Is it weird that both "" and "0" coerce to the same 0 number? Eh. Seems fishy to me.

Let me ask the inverse question: would it be reasonable for String(0) to produce ""? Of course not, we'd clearly expect "0" there. Hmmm.

But what are the other possible behaviors? Should Number("") throw an exception (like Java)? Ugh, no. That intolerably violates the design philosophy. The only other sensible behavior I can conceive is for it to return NaN.

NaN shouldn't be thought of as "not a number"; most accurately, it's the invalid number state. Typically you get NaN from performing a math operation without the required value(s) being numbers (or number like), such as 42 / "abc". The symmetric reasoning from coercion fits perfectly: anything you try to coerce to a number that's not clearly a valid number representation should result in the invalid number NaN—indeed Number("I like maths") produces NaN.

I strongly believe Number("") should have resulted in NaN.

Coercing "" to NaN?

What if we could change just this one thing about JavaScript?

One of the common coercive equalities that creates havoc is the 0 == "" equality. And guess what? It comes directly from the fact that the == algorithm says, in this case, for "" to become a number (0 already is one), so it ends up as 0 == 0, which of course is true.

So, if "" instead coerced to the NaN number value instead of 0, the equality check would be 0 == NaN, which is of course false (because nothing is ever equal to NaN, not even itself!).
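To see the difference side by side, here is a rough sketch. The helper properNumber is hypothetical: it is not part of JavaScript and only approximates the one change being argued for here.

```javascript
// Hypothetical helper (an assumption for illustration): behaves like Number(..),
// except that empty or whitespace-only strings become NaN instead of 0.
function properNumber(value) {
  if (typeof value === "string" && value.trim() === "") {
    return NaN;
  }
  return Number(value);
}

var today = 0 == Number("");             // what == effectively compares now: 0 == 0
var proposed = 0 == properNumber("");    // under the proposed rule: 0 == NaN
var stillWorks = 0 == properNumber("0"); // "0" is a valid number representation
var nanSelf = NaN == NaN;                // NaN is never equal to anything

console.log(today, proposed, stillWorks, nanSelf);
// true false true false
```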

Here, you can see the basis for my overall thesis: the problem with 0 == "" is not the == itself—its behavior at least in this case is fairly sensible. No, the problem is with the Number("") coercion itself. Using === to avoid these cases is like putting a bandaid on your forehead to treat your headache.

You're just treating the symptom (albeit poorly!), not fixing the problem. Value coercion is the problem. So fix the problem. Leave == alone.

Crazy, you say? There's no way to fix Number("") producing 0. You're right, it would appear there's no way to do that, not without breaking millions of JavaScript programs. I have an idea, but we'll come back to that later. We have a lot more to explore to understand my larger point.

Array To String

What about 0 == []? That one seems strange, right? Those are clearly different values. And even if you were thinking truthy/falsy here, [] should be truthy and 0 should be falsy. So, WTF?

The == algorithm says if both operands are objects (objects, arrays, functions, etc), just do a reference comparison. [] == [] always fails since it's always two different array references. But if either operand is not an object but instead is a primitive, == tries to make both sides a primitive, and indeed primitives of the same type.

In other words, == prefers to compare values of the same type. That's quite sensible, I'd argue, because equating values of different types is nonsense. We developers also have that instinct, right? Apples and oranges and all that jazz.

So [] needs to become a primitive. [] becomes a string primitive by default, because it has no default to-number coercion. What string does it become? Here's another coercion I'd argue is busted by original design: String([]) is "".

For some reason, the default behavior of arrays is that they stringify to the string representation of their contents, only. If they have no contents, that just leaves "". Of course, it's more complicated than that, because null and undefined, if present in an array's values, also represent as "" rather than the much more sensible "null" and "undefined" we would expect.

Suffice it to say, stringification of arrays is pretty weird. What would I prefer? String([]) should be "[]". And btw, String([1,2,3]) should be "[1,2,3]", not just "1,2,3" like current behavior.

So, back to 0 == []. It becomes 0 == "", which we already addressed as broken and needing a fix job. If either String([]) or Number("") (or both!) were fixed, the craziness that is 0 == [] would go away. As would 0 == [0] and 0 == ["0"] and so on.
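The chain of conversions can be traced step by step. This sketch uses illustrative variable names and shows current behavior, not the proposed fix:

```javascript
// Step 1: how arrays currently stringify.
var emptyArray = String([]);                 // ""
var withEmpties = String([null, undefined]); // "," (both entries become "")
var withValues = String([1, 2, 3]);          // "1,2,3"

// Step 2: == stringifies the array, then converts that string to a number.
var cmpEmpty = 0 == [];      // 0 == ""    -> 0 == 0   -> true
var cmpZero = 0 == [0];      // 0 == "0"   -> 0 == 0   -> true
var cmpZeroStr = 0 == ["0"]; // 0 == "0"   -> 0 == 0   -> true
var cmpTwo = 0 == [0, 0];    // 0 == "0,0" -> 0 == NaN -> false

console.log(cmpEmpty, cmpZero, cmpZeroStr, cmpTwo);
```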

Again: == is not the problem, stringification of arrays is. Fix the problem, not the symptom. Leave == alone.

Note: The stringification of objects is also weird. String({ a: 42 }) produces "[object Object]" strangely, when {a:42} would make a lot more sense. We won't dive into this case any more here, since it's not typically associated with coercion problems. But it's a WTF nonetheless.

More Gotchas (That Aren't =='s Fault)

If you don't understand the == algorithm steps, I think you'd be well served to read them a couple of times for familiarity. I think you'll be surprised at how sensible == is.

One important point is that == only does a string comparison if both sides are either already strings, or become strings from an object coercing to a primitive. So 42 == "42" might feel like it's treated as "42" == "42", but in fact it's treated as 42 == 42.
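One way to convince yourself that the comparison is numeric is to use strings that represent the same number but are not the same string. A small sketch, with values chosen for illustration:

```javascript
var loose = 42 == "42";        // true
var padded = 42 == " 42 ";     // true: " 42 " converts to the number 42
var leadingZero = 42 == "042"; // true: "042" converts to the number 42
var asStrings = "42" == "042"; // false: two strings are compared as strings

console.log(loose, padded, leadingZero, asStrings);
```

If 42 == "042" were really a string comparison, it would be false, just like the last line.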

Just like when your math teacher scolded you for getting the right answer for the wrong reason, you shouldn't be content to accidentally predict == behavior, but instead make sure you understand what it actually does.

What about many other commonly cited == gotchas?

- false == "": Not as many of you will complain about this one. They're both falsy, so it's at least in the neighborhood of sensible. But actually, their falsiness is irrelevant. Both become numbers, the 0 value. We've already demonstrated what needs to change there.

- false == []: What? [] is truthy, how can it possibly be == false? Here, you're probably tempted to think [] should be coerced to a true / false, but it's not. Instead, false becomes a number (0 naturally), and so then it's 0 == [], and we just saw that case in the previous section.

  Should we change Number(false) from 0 to NaN (and, symmetrically, Number(true) to NaN)? Certainly if we're changing Number("") to NaN, I could make that case. Especially since we can observe Number(undefined) is NaN, Number({}) is NaN, and Number(function(){}) is NaN. Consistency might be more important here?

  Or not. Strong tradition from the C language is for false to 0, and the reverse Boolean(0) clearly should be false. Guess this one is a toss-up.

  But either way, false == [] would be fixed if the other previously stated array stringification or empty string numeric issues were fixed!

- [] == ![]: Nuts! How can something be equal to the negation of itself?

  Unfortunately, that's the wrong question. The ! happens before the == is even considered. ! forces a boolean coercion (and flips its parity), so ![] becomes false. Thus, this case is just [] == false, which we just addressed.
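The three cases above can be verified directly. The comments trace the conversions as described, and the variable names are only for this sketch:

```javascript
var boolVsString = false == "";  // 0 == ""  -> 0 == 0: true
var boolVsArray = false == [];   // 0 == []  -> 0 == "" -> 0 == 0: true
var truthyArray = Boolean([]);   // true: [] is truthy, but == never asks that question
var negated = ![];               // false: ! runs first and forces a boolean
var arrayVsNegation = [] == ![]; // [] == false -> same as the case above: true

console.log(boolVsString, boolVsArray, truthyArray, negated, arrayVsNegation);
```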

The Root Of All == Evils

OK, wait. Let's review for a moment.

We just zipped through a bunch of commonly cited == WTFs. You could keep looking for even more == weirdness, but it's pretty likely that you'd just end up back at one of these cases we just cited, or some variation thereof.

But the one thing all these cases have in common is that if Number("") was changed to NaN, they'd all magically be fixed. It all comes back to 0 == ""!!

Optionally, we could also fix String([]) to "[]" and Number(false) to NaN, for good measure. Or not. We could just fix 0 == "". Yes, I'm saying that virtually all of the frustrations around == actually stem from that one corner case, and furthermore basically have almost nothing to do with == itself.

Take a deep breath and let that sink in.

Adding To Our Frustrations

I really wish I could end the article here. But it's not so simple. Yes, fixing Number("") fixes pretty much all of == woes, but == is only one of the many places people trip over coercion in JS.

The next most common source of coercion issues comes when using the + operator. Again, we're going to see that the complaints are usually made against +, but in reality it's the underlying value coercions that are generally to blame.

Some people are quite bothered by the overloading of + to be both math addition and string concatenation. To be honest, I neither love nor hate this fact. It's fine to me, but I'd also be quite OK if we had a different operator. Alas, we don't, and probably never will.

Simply stated, + does string concatenation if either operand is a string. Otherwise, addition. If + is used with one or both operands not conforming to that rule, they're implicitly coerced to match the expected type (either string or number).
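A short sketch of that rule follows. One detail the simplified wording glosses over is that objects and arrays are first converted to primitives, and only then does + check whether either side is a string:

```javascript
var numeric = 1 + 2;          // 3: no strings involved
var leftString = "1" + 2;     // "12": one operand is a string
var rightString = 1 + "2";    // "12": one operand is a string
var boolAdd = true + 1;       // 2: no strings, so true becomes the number 1
var arrayAdd = [1, 2] + 3;    // "1,23": the array becomes "1,2" first, then concatenation

console.log(numeric, leftString, rightString, boolAdd, arrayAdd);
```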

On the surface, it would seem, if for no other reason than consistency with ==, that + should concatenate only if both were already strings (no coercion). And by extension, you could say that it adds only if both operands were already numbers (no coercion).

But even if we did change + like that, it wouldn't address the corner cases of mixing two different types with +:

42 + ""; // "42" or 42?
41 + "1"; // "411" or 42?

What should + do here? Throwing an error is so Java. 1994 just called.

Is addition really more preferable than concatenation here, or vice versa? My guess is, most people prefer concatenation ("42") for the first operation, but addition (42) for the second. However, the inconsistency of that position is silly. The only sensible position is that either these operations must result in "42" and "411" (as currently) or 42 and 42 (as hypothesized).

Actually, as I argued earlier, if the first + is addition, that operation should result in NaN, not 42, as the "" must become NaN instead of 0. Would you still prefer NaN / 42 to "42" / "411", then? I doubt it.

I don't think there's a better behavior we could change + to.

So how do we explain + gotchas if it's not the + operator's fault? Just as before: value coercions!

For example:

null + 1; // 1
undefined + 1; // NaN

Before I explain, which of those two seems more sensible? I'd say without reservation that the second is vastly more reasonable than the first. Neither null nor undefined are numbers (nor strings), so + can't possibly be seen to be a valid operation with them.

In the two above + operations, none of the operands are strings, so they are both numeric additions. Furthermore, we see that Number(null) is 0 but Number(undefined) is NaN. We should fix one of these, so they're at least consistent, but which?

I strongly feel we should change Number(null) to be NaN.

Other Coercion WTFs

We've already highlighted the majority of cases you'll likely run into in everyday JS coding. We even ventured into some crazy niche corner cases which are popularly cited but which most developers rarely stumble on.

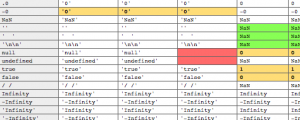

But in the interest of exhaustive completeness, I have compiled a huge gnarly table of a whole bunch of different corner-case'ish values and all the implicit and explicit coercions you can run them through. Grab a strong bottle of alcohol (or your own favorite coping mechanism) and dive in.

If you're looking for a case to criticize coercion, it (or its root) will almost certainly be found on that list. There's a few other surprises hiding in that table, but we've covered the ones you need to be worried about.

Can We Fix?

I've rambled at length both about why coercion is awesome and why it has issues. It's important to remember that from my perspective, the operators are not at fault, though they get all the negative attention.

The real blame lies with some of the value coercion rules. In fact, the root list of problems is rather short. If we fix them, they cascade out to fix a whole bunch of other non-root problems that trip developers up.

Let's recap the root problem value coercions we're concerned about:

- Number("") is 0
  Should be: NaN (fixes most problems!)

- String([]) is "", String([null]) is "", String([undefined]) is ""
  Should be: "[]", "[null]", "[undefined]"

- Number(false) is 0, Number(true) is 1
  Should be (optional/debatable): NaN, NaN

- Number(null) is 0
  Should be: NaN

OK, so what can we do to fix these problems (value coercions) instead of treating the symptoms (operators)?

I'll admit that there's no magic bullet that I can pull out. There's no trick (well... we could monkey-patch Array.prototype.toString() to fix those cases). There's no profound insight.

No, to fix these, we're going to have to brute force it.

Proposing to TC39 a straight-up change to any of these would fail at the first step. There's literally zero chance of that kind of proposal succeeding. But there's another way to introduce these changes, and it might, just might, have a tiny fraction of a % chance. Probably zero, but maybe it's like 1e-9.

"use proper";

Here's my idea. Let's introduce a new mode, switched on by the "use proper"; pragma (symmetrical to "use strict", "use asm", etc), which changes those value coercions to their proper behavior.

For example:

function foo(x) {

"use proper";

return x == 0;

}

foo(""); // false

foo([]); // false

foo(false); // false

foo("0"); // true

Do you see why this is different—and I'm arguing, better—than ===? Because we can still use == for safe coercions like "0" == 0, which the vast majority of us would say is still a sensible behavior.

Furthermore, all these corrections would be in effect:

"use proper";

Number(""); // NaN

Number(" "); // NaN

Number("\n\n"); // NaN

Number(true); // NaN

Number(false); // NaN

Number(null); // NaN

Number([]); // NaN

String([]); // "[]"

String([null]); // "[null]"

String([undefined]); // "[undefined]"

0 == false; // false

1 == true; // false

-1 < ""; // false

1 * ""; // NaN

1 + null; // NaN

You could still use === to totally disable all coercions, but "use proper" would make sure that all these pesky value coercions that have been plaguing your == and + operations are fixed, so you'd be free to use == without all the worry!
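Since "use proper" does not exist, the closest thing available today is a pair of userland helpers. The sketch below is only an approximation under stated assumptions: the names properNumber and properString are hypothetical, they cover just the value coercions listed above, and they do not change how ==, + or any other operator behaves.

```javascript
// Hypothetical helper: Number(..) with the proposed corner-case results.
function properNumber(value) {
  if (value === null || typeof value === "boolean") {
    return NaN; // proposed: null, true and false are not numbers
  }
  if (typeof value === "string" && value.trim() === "") {
    return NaN; // proposed: empty / whitespace-only strings are not numbers
  }
  if (Array.isArray(value)) {
    return NaN; // proposed: arrays stringify with brackets, so never numeric
  }
  return Number(value);
}

// Hypothetical helper: String(..) with bracketed array output.
// Assumes no cyclic arrays, which would recurse without end.
function properString(value) {
  if (Array.isArray(value)) {
    var parts = value.map(function (item) {
      return properString(item);
    });
    return "[" + parts.join(",") + "]";
  }
  return String(value);
}

var emptyToNumber = properNumber("");       // NaN
var zeroToNumber = properNumber("0");       // 0
var emptyArrayText = properString([]);      // "[]"
var nullArrayText = properString([null]);   // "[null]"
var listText = properString([1, 2, 3]);     // "[1,2,3]"

console.log(emptyToNumber, zeroToNumber, emptyArrayText, nullArrayText, listText);
```

Calling these helpers explicitly is, of course, explicit coercion. The point of the pragma idea is that the implicit paths would follow the same rules.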

What Next?

The theoretical proposal I've just made, which likely has near zero chance of ever getting adopted even if I did formally propose it, doesn't seem like it leaves you with much practical take away from all this reading. But if enough of you latch onto the ideas here and help create momentum, it might have a remote chance.

But let me suggest a couple of other possibilities, besides the standards track, to chew on:

- "use proper" could become a new transpile-to-JavaScript language ("ProperScript", "CoercionScript", etc), in the same spirit as TypeScript, Dart, SoundScript, etc. It could be a tool that transforms code by wrapping all value operations in runtime checks that enforce the new rules. We could lessen the obvious performance hit quite a bit by specifying annotations (again, TypeScript style) that hint the tool which operations it should wrap.

- We could take these sets of desired new value coercion rules and turn them into assertions for a build-process that does simulated run-time checks (with test data) to "lint" your code, in a similar spirit to the RestrictMode project, one of my favorite sleeper projects. This tool would spit out warnings if it detects places in your code that expect coercion results that don't hold.

Awareness

Finally, let me just say that even if none of this proposal ever comes to pass, I believe there's still value to be gleaned from this article. By learning exactly what things are going wrong in your == and + operations (that is, the value coercion corner cases themselves), you're now empowered to write better, more robust code that handles (or at least avoids) these cases.

I believe it's far healthier to be aware of the ins and outs of coercion, and use == and === responsibly and intentionally, than it is to just use === because it's easier not to think and not to learn.

If you take writing JS seriously, and I hope you do, isn't it worth your time to internalize this discipline? Won't that do more to improve your code than any blindly-applied linting rule ever will?

Don't forget to check out my You Don't Know JS book series, and specifically the YDKJS: Types & Grammar title, which can be read for free online or purchased through O'Reilly and other sellers.

About Kyle Simpson

Kyle Simpson is a web-oriented software engineer, widely acclaimed for his "You Don't Know JS" book series and nearly 1M hours viewed of his online courses. Kyle's superpower is asking better questions, and he deeply believes in maximally using the minimally-necessary tools for any task. As a "human-centric technologist", he's passionate about bringing humans and technology together, evolving engineering organizations towards solving the right problems, in simpler ways. Kyle will always fight for the people behind the pixels.

One of the most valuable js article I’ve read here recently. Should be like handed out to people on the streets.

Took me a while to read it all, but it’s been an interesting insight. The coercion chart is sweet, thank you Kyle.

By the way, I was going to post a much longer comment, but it doesn’t allow me for some reason (got 403 – probably because of the content, but I have no clue of what that may be… not the ‘WTF’, I hope).

Awesome article and I love the idea of fixing Number("") and some others, but the number-to-boolean comparison results in “proper” mode seem very odd to me.

x = 1;
if (x) {
    // will be reached
}
if (!x) {
    // won't be reached
}
if (x == true) {
    // won't be reached either ???
}

Maybe the solution is to prefer coercion to boolean if one operand of == is boolean already?

@tkissing-

You have a good point. I’ll make the following observations (in no particular order of precedence):

- x == true or x == false, precisely because it looks like it should be doing a ToBoolean but it instead does a ToNumber on both. If you use canonical ToBoolean, like if (x) { .. } or even better if (!!x) { .. }, you never have that problem. It has the advantage of being simpler and actually working.

- == algorithm that I dislike, it’s precisely this point, of how it just turns booleans into numbers. If I could change that, I would. But I think that change would be way more intrusive than what I’ve suggested with "use proper";, and thus way less likely to be plausible.

- Number(true) and Number(boolean) to both being NaN is, frankly, the weakest of my suggestions, and I wouldn’t be heartbroken at all if that didn’t make it in. :)

I agree that Number("") => NaN would solve a lot of problems and I’d be ok to see this take over the classic coercion, even though I think I used it a couple of times (by the way, I usually prefer the unary + operator to convert to numbers). If there’s no number, it should be “not a number”, right? And I also agree that Number(null) should be NaN, it would only make sense. (Also typeof null === "object" is another WTF, but that’s another story.)

But I’m against converting booleans to NaN: they make sense as they are now. Because if Number(true) => NaN, then also true != 1, which would lead to another WTF, since if (true) and if (1) both verify – unless it also holds Boolean(1) => false, which would be another mess.

And I’m also against converting empty arrays to "[]", and the other proposed conversions. Array.prototype.toString normally behaves like join, nothing more, nothing less. I wouldn’t change that, unless we can reach the compromise that toString is exactly like join but adding the opening and closing brackets. That would also imply +[] => NaN. Let’s leave undefined and null to be converted to "".

Who ever compares arrays to numbers, after all?! If it comes to that, you’ve probably done something wrong, in terms of good practices and/or code debugging.

> unless we can reach the compromise that toString is exactly like join but adding the opening and closing brackets.

I suppose that wouldn’t be terribly unreasonable. Except, I really think join(..) is boneheaded.

> Let’s leave undefined and null to be converted to “”.

Why on earth does it make sense for:
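The behavior in question can be sketched as follows, assuming the standard built-in string coercions. This is an illustration only and not necessarily the snippet that was originally posted here; the variable names are made up for the example.

```javascript
// Standalone, null and undefined stringify to their own names.
var aloneNull = String(null);             // "null"
var aloneUndef = String(undefined);       // "undefined"

// Inside an array, the same values become empty strings when joined.
var singleInArray = String([null]);           // ""
var bothInArray = String([null, undefined]);  // ","
```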

I’m sorry, but I just can’t accept that a null or undefined value stringifies differently depending on whether it’s standalone or in an array. That’s just crazy.

I understand your point of view. I _guess_ (but that’s pure speculation) it’s because it would be inconvenient to get a list of undefined in unfilled arrays (e.g. new Array(5)): that would have defeated the purpose of join, which is to give a “nice” representation of the array.

Then toString has been defined as a clone of join with the default separator (which means that if you redefine join, then toString gets affected too).

(I just noticed that putting an object created with Object.create(null) in an array causes a TypeError when calling toString or join. Cool.)

I don’t think it would have been difficult at all for toString/join to distinguish between an actual undefined in the array and an “implied” one from an empty slot. Consider:

    var a = [1,2,,undefined,5];

    a.toString = function() {
        var ret = "";
        for (var i=0; i
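The snippet above is cut off, so the original implementation is not recoverable. As a rough sketch of the idea it describes, and assuming the `in` operator is used to tell an empty slot from an actual undefined value, something like the following would work. The function name stringifyWithHoles is hypothetical.

```javascript
var a = [1, 2, , undefined, 5];

// Hypothetical helper: empty slots become "", actual values
// (including undefined and null) are stringified by name.
function stringifyWithHoles(arr) {
    var parts = [];
    for (var i = 0; i < arr.length; i++) {
        if (i in arr) {
            // The index exists, so this is a real value.
            parts.push(String(arr[i]));
        } else {
            // The index is missing, so this is an empty slot.
            parts.push("");
        }
    }
    return parts.join(",");
}

var withHoles = stringifyWithHoles(a);   // "1,2,,undefined,5"
var builtIn = a.toString();              // "1,2,,,5"
```

The built-in result cannot tell the hole at index 2 from the undefined at index 3, while the sketch keeps them apart.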

Thanks for this great article.

The biggest pitfall that I regularly get wrong is with defaults of (numeric) configuration, like

This works fine. Until someday someone wants to configure a margin of zero. Really frustrating.
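A sketch of the kind of default being described, assuming a hypothetical config object with a margin option and an illustrative default of 10 (none of these names come from the original comment):

```javascript
// Falsy-based default: 0 is falsy, so a configured margin of 0 is lost.
function getMarginLoose(config) {
    return config.margin || 10;
}

// Checking only for "no value" keeps 0. Here `!= null` is true for
// everything except null and undefined.
function getMarginChecked(config) {
    return config.margin != null ? config.margin : 10;
}

var looseZero = getMarginLoose({ margin: 0 });       // 10
var checkedZero = getMarginChecked({ margin: 0 });   // 0
var checkedMissing = getMarginChecked({});           // 10
```

The second version is one of the places where the coercive != null check is arguably the clearest way to say "missing".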

I like the idea of “use proper;” but I don’t think it can work that way, as it introduces backward incompatibilities (unlike “use strict”). When opened on an older browser which does not recognize “use proper”, your code will be executed with the old behavior, which can cause wrong behavior…

Yeah, I can see that being common, and unfortunately my current ideas don’t really do anything to change that.

Well, "use strict"; does something similar. For example:

    function doSomethingToObj(x) {
        "use strict";

        if (this !== undefined) {
            this.x = x;
            return this;
        }
    }

    var o1 = {};
    var o2 = null;

    o1 = doSomethingToObj.call( o1, 42 );

    // in ES5 strict mode, this will throw,
    // but in pre-ES5 will silently "fail" by using
    // window instead of null
    o2 = doSomethingToObj.call( o2, "hello" );

Of course, the "use strict"; differences were a lot more subtle and thus a lot less likely to cause breakage if you relied on their behavior. But it definitely did introduce potential “non-backwards compatible” changes.

Moreover, I’d say that this "use proper"; feature would require tooling to bridge from ES6+ into whatever ES** it made it into, in the same way that ES6 features need tools like BabelJS to bridge into today’s ES5 browsers. I hinted in the article at a couple of different ways that such tooling could go.

Every once in a while you want someone to come and ruffle up some discussion on things that the community considers closed and completed. It’s good to read some counter arguments and gain greater insight and perspective in our craft. Thanks for the article. It was very well written.

I really appreciate your love for understanding JavaScript. I have read your book series ‘You Don’t Know JS’ and found them to be incredibly valuable and even inspirational. Where the hell have you been all my life? :) BTW, I think ‘Doug’ is a much better, more appropriate first name for Mr. Crockford.

Great sensei Kyle Simpson!

Thanks for your efforts to standardize the coercive world!

What’s the status for the ES6 Harmony release?

Maybe one should take into consideration the original purpose of JavaScript, especially for handling and processing user input. In fact it is quite remarkable how they came up with such a simple but versatile solution in such a short time, using some rather unfamiliar base concepts like associative arrays and prototyping. Other languages that perform type coercion (like PHP and VB) all have their own quirks and oddities, because as you said before, there is no golden bullet. Anyway, thanks for this great article and that gnarly but very useful table showing both regular and edge cases.

No, “use proper” will never happen, but what might happen some day is if TC39 finally add some meta-programming features to the language and allow us to redefine coercions and operators. Maybe along with some more comprehensive “domain” support so we can limit our overrides to our own code.

This article provides a lot of insight into type coercion but I still can’t get on board.

In your conclusion you suggest that JavaScript programmers should internalize the difference between == and === and not just write off == completely. I agree that this is a great and necessary step to understand the language.

One could, however, also suggest that programmers learn to read code that uses a few extra bytes and is more explicit with its intent.

Arguably explicit coercion would be no more telling than implicit coercion were JavaScript the only language, but many programmers who end up looking at JavaScript did not come from JavaScript.

It’s not that implicit coercion is evil, it’s that explicit coercion will make sense to a broader audience.

i.e. If you can tell the same story but have it make sense to more people, why wouldn’t you? It’s not like we’re saving significant bytes here.
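To make the trade-off concrete, here is a minimal sketch of the two styles side by side. The input value is made up for the example; both comparisons give the same result, and the difference is only in how visible the conversion is to the reader.

```javascript
// e.g. a value read from a form field
var input = "42";

// Implicit: == converts the string to a number before comparing.
var implicitMatch = (input == 42);

// Explicit: the conversion is spelled out, then compared strictly.
var explicitMatch = (Number(input) === 42);
```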