JPEG Compression with Guetzli

A little while ago Google released its Guetzli JPEG encoder, which claims a 20-30% improvement in file size over libjpeg. Being intrigued, I decided to give it a go. My tool of choice for optimizing JPEGs has long been jpeg-recompress, one of the binaries available in the jpeg-archive project. It's highly configurable, reasonably fast, and really delivers on optimizing JPEGs. But how does Guetzli compare?

A rudimentary test case

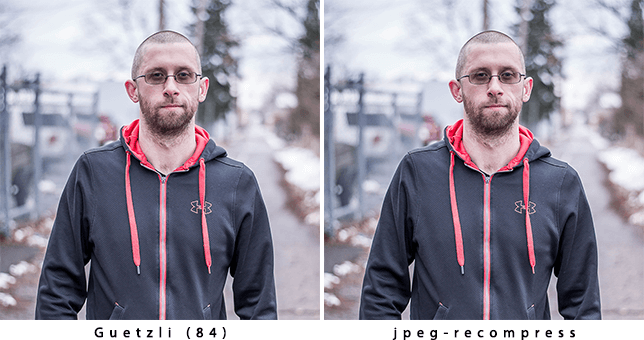

To get started, I used a portrait of myself:

What you see above is a much smaller version of the unoptimized JPEG source I worked with. The original image is 2560x2561, and weighs in at 861 KB. When I processed this image with Guetzli, I used the following command:

guetzli unoptimized.jpg guetzli.jpg

This took a while. Quite a while, in fact. This isn't a surprise, though, considering that Google explains in the project readme that you should expect about a minute of CPU time for every megapixel (at 2560x2561, my test image was about 6.6 megapixels). When Guetzli finished, the output file's size was 770 KB. About 11% smaller than the source. Not bad. Then I ran jpeg-recompress on the unoptimized source using this command:

jpeg-recompress --accurate --strip unoptimized.jpg jpeg-recompress.jpg

jpeg-recompress fared a bit better for me with an output file size of 662 KB, about 23% smaller than the unoptimized source, and about 14% smaller than the Guetzli-optimized version. Does this mean that jpeg-recompress is the undisputed winner? It really depends. The way jpeg-recompress works is by iterating over an image within a default quality range of 40 to 95 to find the best compromise between quality and file size. You can specify the number of loops, but the default (which is usually sufficient) is 6. When optimizing my portrait JPEG, jpeg-recompress landed on a quality of 80. Guetzli's default quality setting is 95. So what happens if I set Guetzli to a comparable setting? Unfortunately, I couldn't. Guetzli doesn't allow you to specify a quality setting less than 84. So to make things a little more comparable to the jpeg-recompress output, I went with the minimum allowed setting of 84:

guetzli --quality 84 unoptimized.jpg guetzli-q84.jpg

The final result? After a (long) wait, the Guetzli-optimized version at a quality setting of 84 was 636 KB. 26 KB smaller than jpeg-recompress at a quality setting of 80. How does Guetzli stack up to the competition when it comes to visual quality? In the case of my portrait image, both optimized versions are nearly indistinguishable.
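The way jpeg-recompress narrows in on a quality setting, as described earlier, can be pictured as a bisection over the quality range. The sketch below only illustrates that idea and is not jpeg-recompress's actual code: `search_quality` and the `mock_score` callback are hypothetical names, and the callback is assumed to print an integer similarity score for a given quality (higher meaning closer to the source).

```shell
# search_quality MIN MAX LOOPS TARGET SCORE_CMD
# Bisects the quality range [MIN, MAX] for at most LOOPS attempts and prints
# the lowest quality tried whose score met TARGET (or MAX if none did).
# SCORE_CMD is a hypothetical callback: given a quality, it prints an integer score.
search_quality() (
  min=$1 max=$2 loops=$3 target=$4 score_cmd=$5
  best=$max
  i=0
  while [ "$i" -lt "$loops" ] && [ "$min" -le "$max" ]; do
    quality=$(( (min + max) / 2 ))
    score=$("$score_cmd" "$quality")
    if [ "$score" -ge "$target" ]; then
      best=$quality            # good enough: remember it, then try lower
      max=$(( quality - 1 ))
    else
      min=$(( quality + 1 ))   # too lossy: search the upper half
    fi
    i=$(( i + 1 ))
  done
  echo "$best"
)

# Stand-in scorer for demonstration only: pretends the score rises linearly with quality.
mock_score() { echo $(( $1 * 100 )); }

search_quality 40 95 6 8000 mock_score   # prints 80
```

With a real scorer, each call would encode the image at the given quality and compare it against the source, which is why more loops cost more time but can settle on a tighter result.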

Of course, this is just a one-off example. What does Guetzli performance look like across a whole set of images? Let's take a look!

Guetzli performance across a set of images

A single test case of a couple of image optimizers can be useful, but really only provides a narrow view of performance. If we want to get a broader sense of how well an optimizer works, we should test it across a large set of images. I used several image optimizers on a set of nearly 500 unoptimized images. These images were of various foods, the type of imagery you'd be used to seeing on a recipe website. I used the find command to process the image batch (using the technique I described in this blog post). The unoptimized payload of these images was 37,388 kilobytes. In each case, I aimed for a target quality of 84, the lowest quality allowed by the Guetzli encoder. I also used the time command to get a sense of how long each optimizer takes. Below are the results showing the command used for each optimizer, the time each one took to process the batch of unoptimized images, and the cumulative size of the optimized output:

| Optimizer Command | Time | Size (KB) |
|---|---|---|
| (Unoptimized) | n/a | 37,388 |
| jpegoptim -m84 -s --all-progressive | 16s | 16,136 |
| jpeg-recompress -n 84 -x 84 -a -s | 4m 45s | 15,320 |
| guetzli --quality 84 | 150m 41s | 13,636 |
| cjpeg -optimize -quality 84 -progressive | 38s | 15,364 |

Note: The cjpeg binary used is from mozjpeg 3.1
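A batch run like the ones in the table could be scripted roughly as follows. This is a minimal sketch rather than the exact technique from the blog post mentioned above: `optimize_batch` is a hypothetical helper, the directory names are placeholders, and it assumes an optimizer that takes an input path followed by an output path, as guetzli and jpeg-recompress do.

```shell
# optimize_batch SRC_DIR OUT_DIR COMMAND [ARGS...]
# Runs COMMAND [ARGS...] <input> <output> for every .jpg found under SRC_DIR.
# Outputs are written flat into OUT_DIR, so file names are assumed to be unique.
optimize_batch() (
  src=$1
  out=$2
  shift 2
  mkdir -p "$out"
  find "$src" -type f -name '*.jpg' | while IFS= read -r file; do
    # Redirect stdin so the optimizer cannot swallow the list of file names.
    "$@" "$file" "$out/$(basename "$file")" < /dev/null
  done
)

# Example (in bash, where `time` can wrap a function call):
#   time optimize_batch ./unoptimized ./guetzli-q84 guetzli --quality 84
#   du -ck ./guetzli-q84/*.jpg | tail -n 1   # cumulative size in KB
```

Optimizers with a different calling convention would need their own wrapper: jpegoptim works on files in place unless given a destination directory, and cjpeg writes to standard output unless told otherwise.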

Now for the takeaway: Guetzli beats out all of the other optimizers at the lowest allowable quality setting of 84, but it takes a brutal amount of time to do so. While most optimizers will get reasonably close, they can do so anywhere from roughly 30 to over 500 times faster.
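The figures behind that takeaway can be checked with a little shell arithmetic. The sizes and times come straight from the table; `pct_smaller`, `to_seconds`, and `report` are hypothetical helper names, and integer division means the time ratios are rounded down.

```shell
# pct_smaller ORIGINAL_KB OPTIMIZED_KB: size reduction as a rounded whole percentage.
pct_smaller() { echo $(( (($1 - $2) * 100 + $1 / 2) / $1 )); }

# to_seconds MINUTES SECONDS: convert a "150m 41s" style time to seconds.
to_seconds() { echo $(( $1 * 60 + $2 )); }

original=37388                        # unoptimized payload in KB
guetzli_secs=$(to_seconds 150 41)     # 9041 seconds

# report NAME MINUTES SECONDS SIZE_KB: one summary line per optimizer.
report() {
  elapsed=$(to_seconds "$2" "$3")
  echo "$1: $(pct_smaller "$original" "$4")% smaller; guetzli took $(( guetzli_secs / elapsed ))x as long"
}

report jpegoptim       0   16 16136   # 57% smaller; 565x
report jpeg-recompress 4   45 15320   # 59% smaller; 31x
report guetzli         150 41 13636   # 64% smaller; 1x
report cjpeg           0   38 15364   # 59% smaller; 237x
```

In other words, Guetzli's extra five or so percentage points over jpeg-recompress and cjpeg cost it somewhere between 31 and 565 times the processing time on this image set.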

Moreover, a minimum quality setting of 84 isn't as low as we should be aiming for when it comes to web imagery. We have to treat image quality as a subjective issue, because any number of variables are involved in how the user assesses image quality. What about viewing distance, physical device size, or screen resolution? The rules are simple: If you can't tell the difference between an unoptimized image and an optimized one, your users won't be able to. If you can tell the difference, that's still no guarantee that your users will be able to discern its quality, because they likely won't have a higher-quality version of the same image for reference.

When running jpeg-recompress with a specified quality range of 30-70 across the same set of images, I was able to achieve an output size of 11,114 KB. That's another couple megabytes below the best that Guetzli could do. Even though the output quality was lower, the results were still acceptable (albeit subjectively so).
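For reference, a run with that quality range might look like the sketch below. It reuses the flags shown in the table (-n and -x for the minimum and maximum quality, -a and -s for accurate mode and metadata stripping); the `recompress_range` wrapper, the `DRY_RUN` switch, and the file names are hypothetical conveniences, not part of jpeg-archive.

```shell
# recompress_range MIN MAX INPUT OUTPUT
# Runs jpeg-recompress with its quality search limited to [MIN, MAX].
# With DRY_RUN set, the command line is printed instead of executed.
recompress_range() {
  set -- jpeg-recompress -n "$1" -x "$2" -a -s "$3" "$4"
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Dry run for a single image (file names are placeholders).
DRY_RUN=1 recompress_range 30 70 unoptimized.jpg recompressed.jpg
# prints: jpeg-recompress -n 30 -x 70 -a -s unoptimized.jpg recompressed.jpg
```

At 11,114 KB, the batch came in 2,522 KB under Guetzli's 13,636 KB.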

Another thing to consider is that Guetzli cannot encode progressive JPEGs. Progressive JPEGs are usually a smidge smaller than baseline JPEGs. Better yet, because of how progressive JPEGs load, they minimize layout shifting. Less layout shifting means less page re-rendering. Less rendering means less device battery consumed for processing.

While Guetzli is highly effective in situations where quality is the paramount concern, I personally prefer other optimizers. While Guetzli handily outdoes the competition at comparable quality settings, it doesn't allow you to optimize images at a setting lower than 84. Because of this limitation, how incredibly CPU-intensive it is, and its lack of progressive JPEG support, I don't feel comfortable recommending it. Don't take my word for it, though. Check out this list of articles about Guetzli and form your own opinions:

- Announcing Guetzli: A New Open Source JPEG Encoder

- Google Releases Guetzli Open Source JPEG Encoder Generating 20 to 35% Smaller Files Compared to Libjpeg

- Guetzli, Google's New JPEG Encoder

However you decide to optimize your images, realize that something is always better than nothing. No matter which optimizer you go with, you're doing right by your users, and that's really what matters.

About Jeremy Wagner

Jeremy is a web developer living and working in the frozen tundra of Minneapolis-Saint Paul. He's the author of the Manning Publications book Web Performance in Action, a web developer's guide to building fast websites. You can find him on Twitter @malchata, or read his ramblings at https://jeremywagner.me.

People who tested it say that CPU load and memory usage are very significant. Also, compression of ultra HD images gets ~30% better. Smaller images get only 20% better compression or below.

Haven’t tested Guetzli, but I know for sure that mozjpeg compresses JPEG images up to 90% without losing quality.

I have written a blog post about it here: https://butcaru.com/nodejs-image-compression-tool/

When I’ve got some spare time, I’ll do a comparison of the two solutions. I’m curious about the results :)

Just another article that I wrote a week ago to add to your list of links. I did a handful of tests with different types of images. http://developer.telerik.com/content-types/opinion/can-googles-guetzli-jpeg-encoder-help-solve-web-page-bloat/

We developed an online tool that automatically does the job, based on the Guetzli algorithm. We’d be glad if you gave it a try. Thanks! :)

Here it is: http://www.ishrinker.com

wanna try without install? try https://store.docker.com/community/images/fabiang/guetzli

Compared with MozJPEG, Guetzli focuses on image quality and sacrifices encoding time: it is on the order of 800-1000x slower than MozJPEG.

Ah, thanks for this. More comprehensive than my little foray:

http://www.earth.org.uk/note-on-site-technicals-4.html#guetzli

One of those ‘later’ tools, along with brotli for me, though I am a fan of zopfli and zopflipng for the matching use cases!

Rgds

Damon

At our company, we render a lot of images a day. We wanted to experiment with Guetzli and we found a solution to distribute (and therefore a-sync) the guetzli process. Have a read here: https://techlab.bol.com/from-a-crazy-hackathon-idea-to-an-empty-queue/

Guetzli is slow, but it is good for edge-case JPEGs, i.e. those with sharp edges (like comics and images with text).

And maybe we'll get a big speedup:

https://github.com/google/guetzli/pull/227

While I haven’t personally tried Guetzli, I can confirm that mozjpeg is quite effective at compressing JPEG images by up to 90% without sacrificing image quality.